We recently discussed this paper by A. M. Ni et al. from the Cohen lab ([2]). The authors investigated the neuronal and behavioral effects of attention and perceptual learning, as well as the relationship between stimulus-conditioned correlated variability and subjects’ performance in a behavioral task. Through empirical studies and statistical analyses, they found a single, consistent relationship between stimulus-conditioned correlated variability and behavioral performance, regardless of the timescale over which the correlated variability changed ([2]). They further found that subjects’ choices were predicted by dimensions of population activity that were aligned with the principal component axes of the stimulus-conditioned correlated variability ([2]).

In their empirical study, they used the same behavioral task as described in [1]. Two rhesus monkeys with chronically implanted microelectrode arrays performed an orientation-change detection task with cued attention. The stimuli were two Gabor stimuli, one placed within the receptive fields of the recorded V4 neurons and one placed in the opposite visual hemifield. Each experimental session consisted of one block of trials with attention cued to the left and another block of trials with attention cued to the right. Within each session, the orientation change happened at the cued location 80% of the time and at the uncued location 20% of the time. They used detection sensitivity as the metric for behavioral performance.
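The summary above does not say how detection sensitivity was computed. As a rough illustration only, the sketch below assumes the standard signal-detection measure d′ (the z-scored hit rate minus the z-scored false-alarm rate). The function name `d_prime`, the 0.5 correction for extreme rates, and all trial counts are assumptions made for this example, not values from the paper.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Detection sensitivity d' = z(hit rate) - z(false-alarm rate).

    Adding 0.5 to each count (a common log-linear correction, assumed here)
    keeps both rates strictly between 0 and 1 so the z-transform is finite.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit_rate) - z(fa_rate)


# Made-up counts for one session: changes at the cued vs. the uncued location.
cued = d_prime(hits=72, misses=8, false_alarms=5, correct_rejections=75)
uncued = d_prime(hits=11, misses=9, false_alarms=5, correct_rejections=75)
print(f"d' cued: {cued:.2f}, d' uncued: {uncued:.2f}")
```

With counts like these, a higher d′ at the cued location would correspond to the better performance on attended trials described in the text.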

One key variable of interest is the stimulus-conditioned correlated variability between pairs of units (typically referred to as noise correlation in the neuroscience literature), which they defined as the Pearson correlation coefficient between the responses of the two units (namely the firing rates during the 60-260 ms time window after stimulus onset) to repeated presentations of the same stimulus. Because the visual stimulus was kept identical across these presentations, this quantity captures the trial-to-trial variability shared by the two units rather than any stimulus-driven covariation.
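The definition above can be sketched in a few lines. This is a minimal illustration, assuming each trial is stored as a list of spike times in milliseconds relative to stimulus onset. The helper names (`firing_rate`, `pearson`, `noise_correlation`) and the spike times are made up for the example and are not taken from the paper's analysis code.

```python
import math

WINDOW_MS = (60.0, 260.0)  # response window after stimulus onset, as in the text


def firing_rate(spike_times_ms, window_ms=WINDOW_MS):
    """Firing rate (spikes/s) of one unit on one trial within the window."""
    start, stop = window_ms
    n_spikes = sum(1 for t in spike_times_ms if start <= t < stop)
    return n_spikes / ((stop - start) / 1000.0)


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def noise_correlation(trials_unit_a, trials_unit_b):
    """Correlation of two units' responses across repeats of one stimulus.

    Each argument is a list of trials; each trial is a list of spike times (ms).
    """
    rates_a = [firing_rate(trial) for trial in trials_unit_a]
    rates_b = [firing_rate(trial) for trial in trials_unit_b]
    return pearson(rates_a, rates_b)


# Made-up spike times for four repeats of the same stimulus.
unit_a = [[70, 120, 200], [90, 150], [65, 100, 180, 240], [110]]
unit_b = [[80, 130, 210, 250], [95, 300], [70, 90, 160, 220, 255], [50, 140]]
correlation = noise_correlation(unit_a, unit_b)
print(f"noise correlation: {correlation:.3f}")
```

In practice one would compute this for every pair of simultaneously recorded units and average across pairs. The sketch also assumes neither unit has a constant response across trials, since the correlation is undefined in that case.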

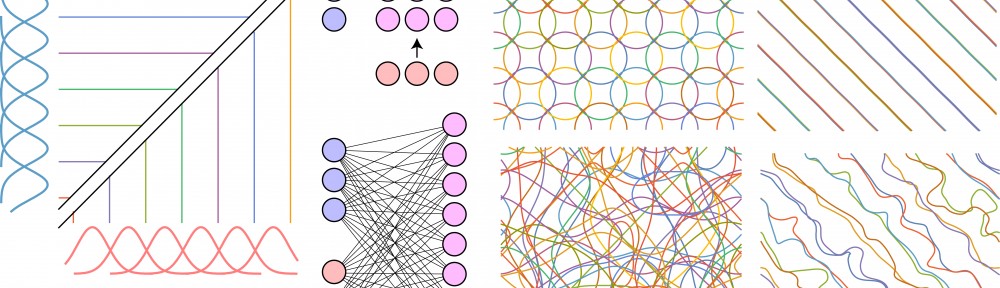

The first key observation was that behavioral performance was better on attended trials than on unattended trials, and that performance also improved over sessions with perceptual learning. Both perceptual learning and attention were associated with decreases in the mean-normalized trial-to-trial variance (Fano factor) of individual units, as well as in the correlated variability between pairs of units in response to repeated presentations of the same visual stimulus. Moreover, they showed that both attention and perceptual learning improved the performance of a cross-validated, optimal linear stimulus decoder, which was trained to discriminate the population responses to the stimulus before the orientation change from the population responses to the changed stimulus.

To examine the relationship between correlated variability and behavioral performance more directly, they performed PCA on the population responses to the same repeated stimuli used to compute correlated variability. By definition, the first PC explains more of the correlated variability than any other dimension. Their analyses showed that activity along this first PC axis had a much stronger relationship with the monkeys’ behavior than would be expected if the monkeys had used an optimal stimulus decoder. The robust relationship between correlated variability and perceptual performance suggests that, although attention and learning mechanisms act on different timescales, they share a common computation.
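Two of the quantities above, the Fano factor of a single unit and the first principal component of the population responses to a repeated stimulus, can be illustrated with a small sketch. It assumes responses are stored as a trials-by-units table of spike counts. The function names, the use of power iteration to find the leading eigenvector, and the toy counts are all choices made for this example and do not reproduce the authors' analysis.

```python
import math
from statistics import mean, variance


def fano_factor(counts):
    """Trial-to-trial variance of one unit's spike counts divided by their mean."""
    return variance(counts) / mean(counts)


def covariance_matrix(responses):
    """Sample covariance across trials; responses[t][u] is unit u on trial t."""
    n_trials, n_units = len(responses), len(responses[0])
    means = [sum(trial[u] for trial in responses) / n_trials for u in range(n_units)]
    centered = [[trial[u] - means[u] for u in range(n_units)] for trial in responses]
    return [[sum(row[i] * row[j] for row in centered) / (n_trials - 1)
             for j in range(n_units)] for i in range(n_units)]


def first_pc(cov, n_iter=200):
    """Leading eigenvector and eigenvalue of cov via power iteration.

    Starts from a uniform vector, so it assumes the first PC is not
    orthogonal to that vector and that cov is not all zeros.
    """
    n = len(cov)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(n_iter):
        w = [sum(cov[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    cov_v = [sum(cov[i][j] * v[j] for j in range(n)) for i in range(n)]
    eigenvalue = sum(a * b for a, b in zip(v, cov_v))
    return v, eigenvalue


# Made-up spike counts: 5 repeats of one stimulus, 3 units.
responses = [[10, 12, 8], [12, 14, 9], [8, 10, 7], [11, 13, 9], [9, 11, 7]]
cov = covariance_matrix(responses)
pc1, eigenvalue = first_pc(cov)
explained = eigenvalue / sum(cov[i][i] for i in range(len(cov)))

# Projection of each trial onto the first PC axis (mean subtraction omitted,
# which only shifts every projection by the same constant).
projections = [sum(w * r for w, r in zip(pc1, trial)) for trial in responses]
print(f"Fano factor of unit 0: {fano_factor([t[0] for t in responses]):.2f}")
print(f"variance explained by PC1: {explained:.1%}")
```

In this toy data the three units fluctuate together from trial to trial, so most of the variance lies along one shared axis. Per-trial projections onto such an axis are the kind of quantity that could then be related to behavioral choices.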

[1] M. R. Cohen and J. H. R. Maunsell. Attention improves performance primarily by reducing interneuronal correlations. Nature Neuroscience, 12(12):1594–1600, 2009.

[2] A. M. Ni, D. A. Ruff, J. J. Alberts, J. Symmonds, and M. R. Cohen. Learning and attention reveal a general relationship between population activity and behavior. Science, 359(6374):463–465, 2018.